ICCV 2019

Guided Image-to-Image Translation with Bi-Directional Feature Transformation

Badour AlBahar, Jia-Bin Huang

Abstract

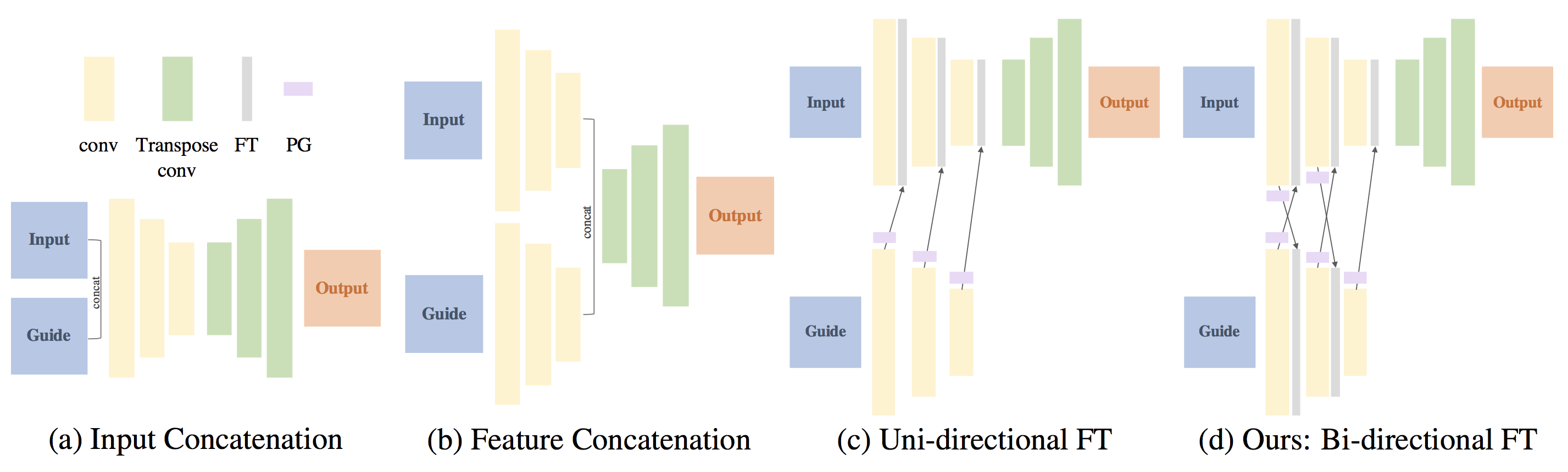

We address the problem of guided image-to-image translation, where we translate an input image into another image while respecting the constraints provided by an external, user-provided guidance image. Various conditioning mechanisms for leveraging the given guidance image have been explored, including input concatenation, feature concatenation, and conditional affine transformation of feature activations. All of these conditioning mechanisms, however, are uni-directional, i.e., no information flows from the input image back to the guidance. To better utilize the constraints of the guidance image, we present a bi-directional feature transformation (bFT) scheme. We show that our novel bFT scheme outperforms other conditioning schemes and achieves results comparable to state-of-the-art methods on different tasks.

Conditioning Schemes

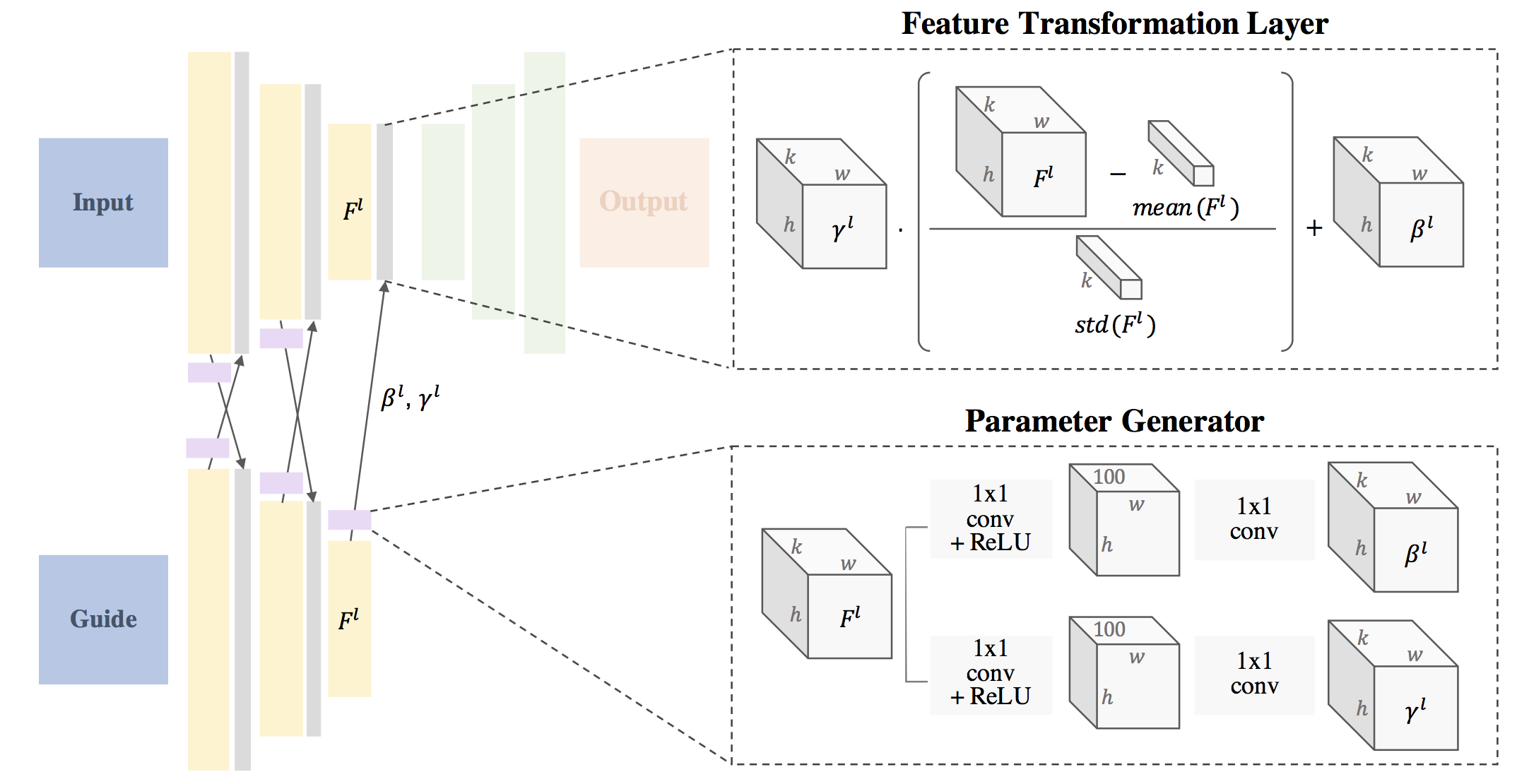
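For comparison, the uni-directional conditioning mechanisms listed in the abstract can be sketched on feature-map shapes. This is a minimal NumPy illustration under stated assumptions, not the authors' code: arrays are channel-first `(C, H, W)`, the function names and weight matrices are hypothetical, and the conditional affine transformation is written in a FiLM-style form with one scale and one shift per channel, predicted from spatially averaged guidance features.

```python
import numpy as np

def input_concat(image, guide):
    # Input concatenation: stack image and guidance channels before the first layer.
    return np.concatenate([image, guide], axis=0)

def feature_concat(img_feat, guide_feat):
    # Feature concatenation: stack intermediate feature maps from the two streams.
    return np.concatenate([img_feat, guide_feat], axis=0)

def conditional_affine(img_feat, guide_feat, w_gamma, w_beta):
    # Conditional affine transform (FiLM-style, assumed form): one scale and one
    # shift per channel, predicted from the spatially averaged guidance features.
    # w_gamma, w_beta: hypothetical linear weights of shape (C_img, C_guide).
    summary = guide_feat.mean(axis=(1, 2))          # (C_guide,)
    gamma = w_gamma @ summary                        # (C_img,)
    beta = w_beta @ summary                          # (C_img,)
    # Broadcast the per-channel parameters over every spatial location.
    return gamma[:, None, None] * img_feat + beta[:, None, None]

rng = np.random.default_rng(0)
image, guide = rng.standard_normal((3, 8, 8)), rng.standard_normal((1, 8, 8))
print(input_concat(image, guide).shape)              # (4, 8, 8)
f_img, f_guide = rng.standard_normal((16, 8, 8)), rng.standard_normal((16, 8, 8))
print(feature_concat(f_img, f_guide).shape)          # (32, 8, 8)
w_g, w_b = rng.standard_normal((16, 16)), rng.standard_normal((16, 16))
print(conditional_affine(f_img, f_guide, w_g, w_b).shape)  # (16, 8, 8)
```

In all three functions the guidance only modifies the input stream; nothing is passed from the input image back to the guidance, which is the limitation the bi-directional scheme targets.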

Bi-directional Feature Transformation (bFT)

We present a bi-directional feature transformation model to better utilize the additional guidance in guided image-to-image translation problems. We replace every normalization layer in the encoder with our novel feature transformation layer. This layer scales and shifts the normalized features of that layer in a spatially varying manner, i.e., the scaling and shifting parameters can differ at every spatial location. These parameters are generated by a parameter-generation model consisting of two convolution layers with a 100-dimensional bottleneck. The transformation is applied in both directions: the guidance features condition the input-image features, and the input-image features condition the guidance features.
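The layer described above can be illustrated with a minimal NumPy sketch of one bFT step, for illustration only. Several details are assumptions not stated on this page: features are channel-first `(C, H, W)` arrays for a single image; the normalization is instance normalization without its own affine parameters; both generator convolutions use 3×3 kernels with a ReLU between them; and the weights are randomly initialized rather than trained. All function names are hypothetical.

```python
import numpy as np

def conv2d_same(x, w, b):
    # x: (C_in, H, W); w: (C_out, C_in, k, k); b: (C_out,). Zero padding keeps H, W.
    c_out, _, k, _ = w.shape
    pad = k // 2
    xp = np.pad(x, ((0, 0), (pad, pad), (pad, pad)))
    H, W = x.shape[1:]
    out = np.zeros((c_out, H, W))
    for i in range(k):
        for j in range(k):
            patch = xp[:, i:i + H, j:j + W]                      # (C_in, H, W)
            out += np.einsum('oc,chw->ohw', w[:, :, i, j], patch)
    return out + b[:, None, None]

def instance_norm(x, eps=1e-5):
    # Assumed normalization: zero mean, unit variance per channel.
    mean = x.mean(axis=(1, 2), keepdims=True)
    var = x.var(axis=(1, 2), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def init_generator(rng, c_cond, c_out, bottleneck=100, k=3):
    # Hypothetical random init of the two-conv parameter generator.
    return {
        "w1": rng.standard_normal((bottleneck, c_cond, k, k)) * 0.1,
        "b1": np.zeros(bottleneck),
        "w2": rng.standard_normal((2 * c_out, bottleneck, k, k)) * 0.01,
        "b2": np.zeros(2 * c_out),
    }

def feature_transform(x, cond, params):
    # Predict per-pixel scale and shift from cond, then modulate normalized x.
    h = np.maximum(conv2d_same(cond, params["w1"], params["b1"]), 0.0)  # 100-channel bottleneck
    gamma, beta = np.split(conv2d_same(h, params["w2"], params["b2"]), 2, axis=0)
    return gamma * instance_norm(x) + beta

def bft(img_feat, guide_feat, p_img, p_guide):
    # Bi-directional: each stream is conditioned on the other's (pre-update) features.
    new_img = feature_transform(img_feat, guide_feat, p_img)
    new_guide = feature_transform(guide_feat, img_feat, p_guide)
    return new_img, new_guide

rng = np.random.default_rng(0)
img_feat = rng.standard_normal((16, 8, 8))
guide_feat = rng.standard_normal((8, 8, 8))
p_img = init_generator(rng, c_cond=8, c_out=16)
p_guide = init_generator(rng, c_cond=16, c_out=8)
out_img, out_guide = bft(img_feat, guide_feat, p_img, p_guide)
print(out_img.shape, out_guide.shape)  # (16, 8, 8) (8, 8, 8)
```

Here `gamma` and `beta` have the full `(C, H, W)` shape rather than one value per channel, which is what makes the transformation spatially varying. Both directions read the features from before the update, an ordering this sketch assumes.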

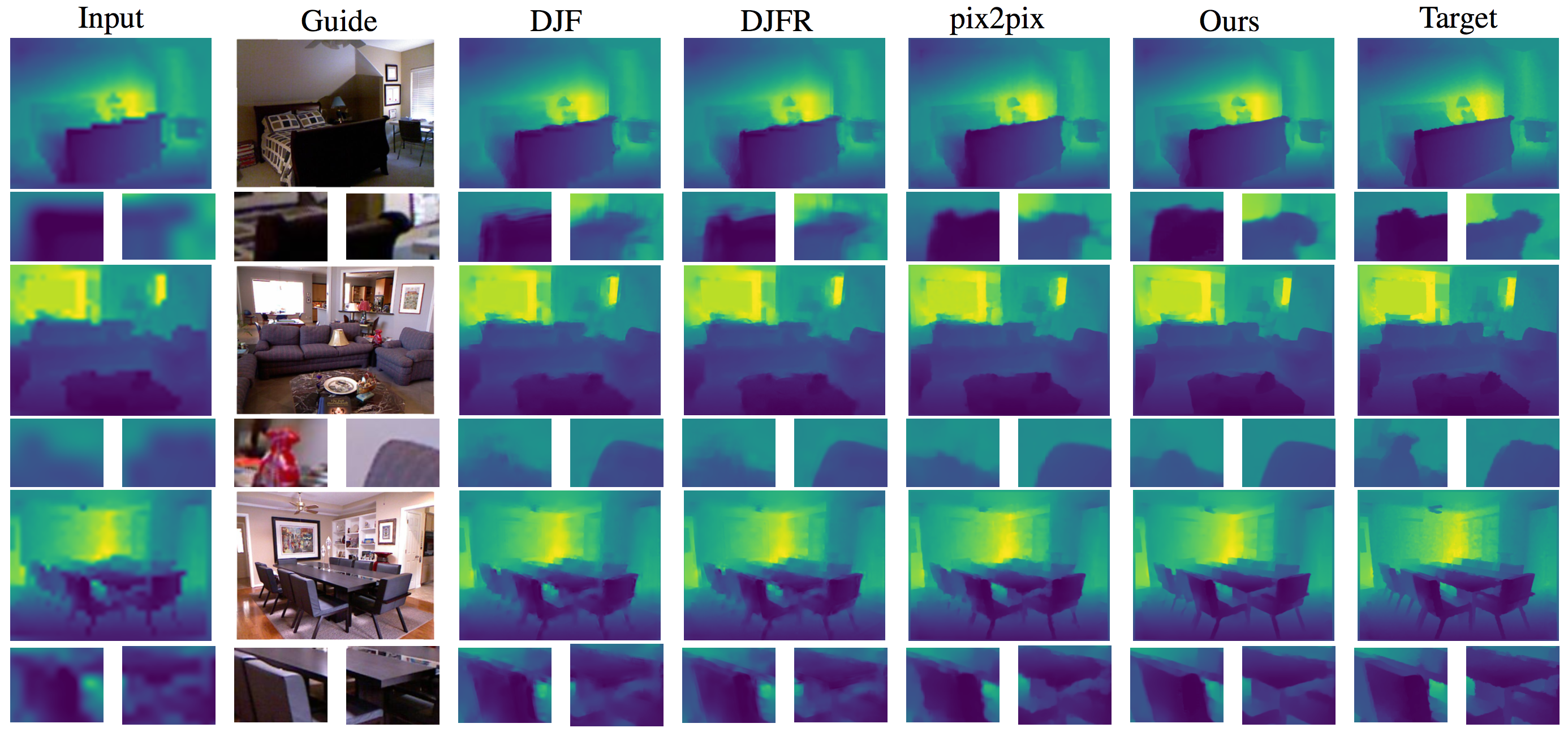

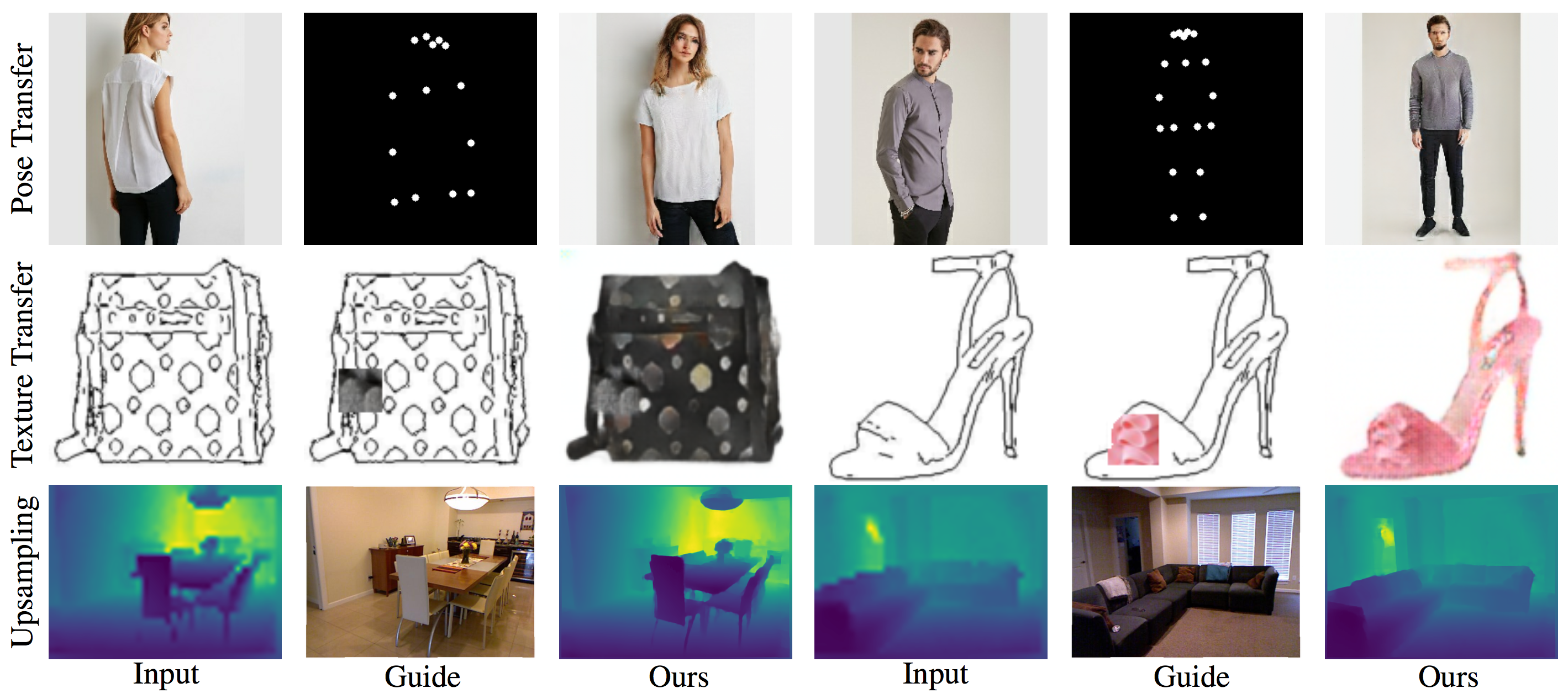

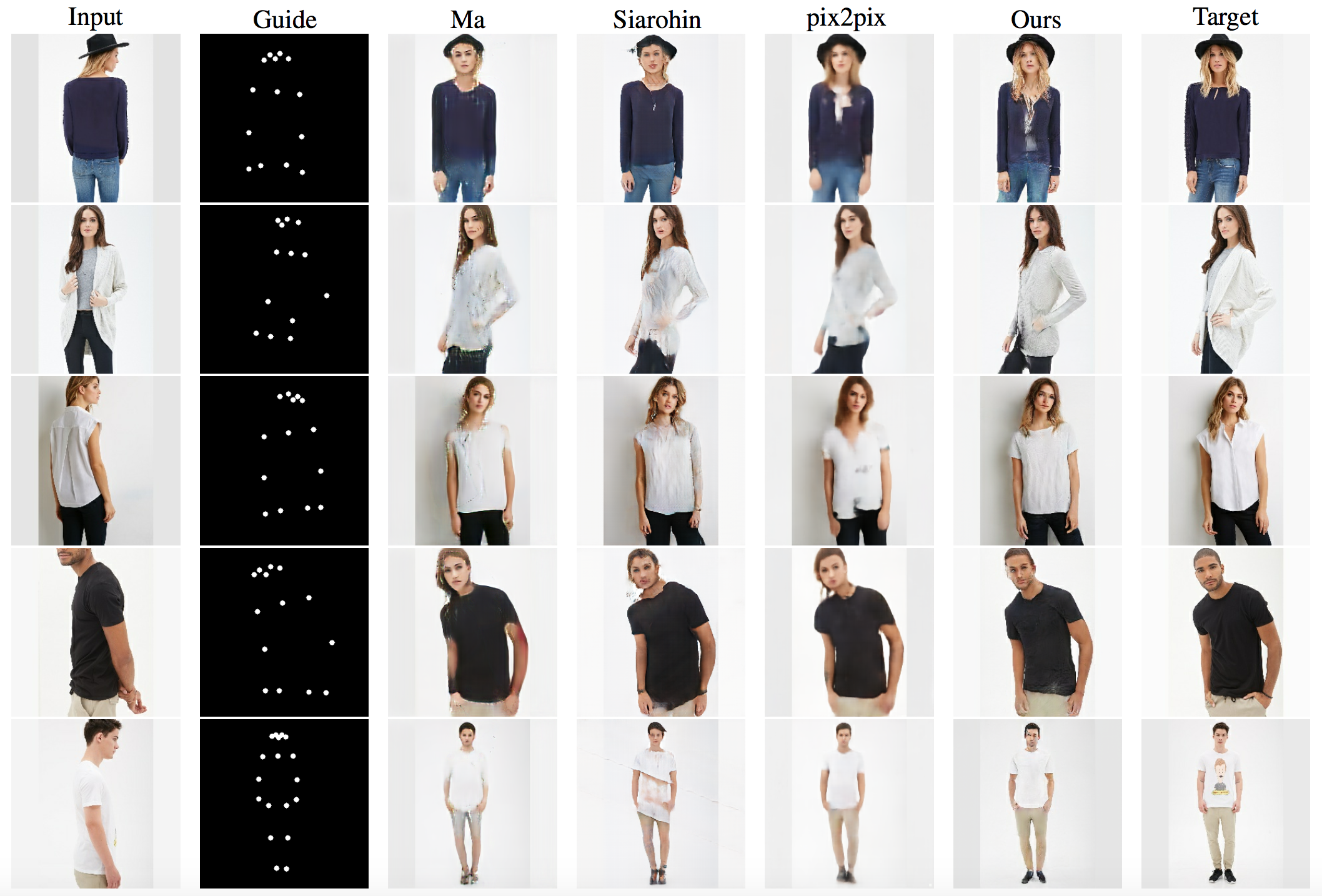

Qualitative Comparisons

Texture Transfer

Pose Transfer

Depth Upsampling